In this tutorial, we’ll explore how to generate epic AI music for free using the Audiocraft MusicGen library in Google Colab. Audiocraft’s MusicGen, developed by Facebook Research, offers several pre-trained models that create melodies in various styles from text prompts, making it a valuable tool for musicians, composers, and anyone interested in music creation.

MusicGen provides a simple and intuitive interface for generating music. It is built on state-of-the-art deep learning models and can produce music that spans different styles and moods. Whether you’re looking to create an epic battle theme, a melancholic piano ballad, or animated lo-fi chill beats, MusicGen can help you achieve your creative goals.

For more information, you can check out the Audiocraft GitHub repository and the MusicGen documentation.

Step-by-Step Guide

1. Setting Up the Environment

First, we’ll need to install the Audiocraft library. Open a new Google Colab notebook and run the following command:

!pip install -U git+https://github.com/facebookresearch/audiocraft

2. Importing Necessary Libraries

Next, we’ll import the necessary libraries and modules for our music generation:

from audiocraft.models import musicgen

from IPython.display import Audio

from audiocraft.data.audio import audio_write

3. Loading the Pre-trained Model

Audiocraft offers several pre-trained models designed to cater to different needs and computational resources. Let’s take a look at the available models:

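- melody: A legacy short name for facebook/musicgen-melody, a 1.5B model that can be conditioned on a reference melody in addition to a text prompt. Used with text alone, as in this tutorial, it behaves like a regular text-to-music model.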

- facebook/musicgen-small: This is a lightweight model suitable for quick experiments and generating shorter music clips. It is less resource-intensive and faster to run.

- facebook/musicgen-medium: A balanced model that provides a good trade-off between quality and computational requirements. It is ideal for most general purposes.

- facebook/musicgen-large: The most advanced and resource-intensive model. It generally produces the highest-quality music with more intricate detail, at the cost of more memory and slower generation.

- facebook/musicgen-stereo-X: Similar to the small, medium or large models but optimized for stereo sounds. This model generates richer and more immersive audio experiences.

For this tutorial, we’ll use the musicgen-medium model. You can also experiment with other models by uncommenting the corresponding lines:

# model = musicgen.MusicGen.get_pretrained('melody', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-small', device='cuda')

model = musicgen.MusicGen.get_pretrained('facebook/musicgen-medium', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-stereo-medium', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-large', device='cuda')

4. Defining Variables

We can define the duration of the generated music. In this example, we’ll set it to 45 seconds. Additionally, we’ll prepare a list of prompts that describe the style of music we want to generate:

duration = 45 # duration in seconds

sampling_rate = model.sample_rate

musicPrompts = ["epic battle music", "piano melancholic ballad", "animated lofi chill beats"]

model.set_generation_params(duration=duration)

5. Generating the AI Music

The generate function is the core of the music generation process. It takes a list of prompts and returns the corresponding audio as one batch, with one clip per prompt. Here are the key inputs that control it:

- prompts: A list of textual descriptions that guide the music generation process. This is the function's first argument, which Audiocraft names descriptions.

- duration: The length of the generated music in seconds (set earlier using set_generation_params).

- progress: A boolean flag that, when set to True, displays a progress bar during the generation process.
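To make the link between duration and output size concrete, here is a small sketch. It assumes the 32 kHz sample rate used by the MusicGen checkpoints (in the notebook, read model.sample_rate instead of hard-coding it) and a mono model. expected_output_shape is a hypothetical helper for illustration, not part of Audiocraft, and the shape it returns is what we would roughly expect from generate, not a guarantee.

```python
def expected_output_shape(num_prompts, duration_s, sample_rate=32_000, channels=1):
    """Return the (batch, channels, samples) shape we would expect from generate()."""
    # One clip per prompt; each clip holds duration * sample_rate samples per channel.
    samples = int(duration_s * sample_rate)
    return (num_prompts, channels, samples)


shape = expected_output_shape(num_prompts=3, duration_s=45)
print(shape)  # (3, 1, 1440000)
```

For a stereo checkpoint we would pass channels=2, since those models produce two channels per clip.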

In our case, we are using the prompts list and displaying the progress:

audioGenerations = model.generate(musicPrompts, progress=True)

Additional parameters can fine-tune the generation process. Sampling settings such as the temperature (which affects how adventurous the output is) are passed to set_generation_params, and seeding PyTorch's random number generator before generating makes runs more reproducible.
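As a rough sketch of how these extra settings could be wired up: the keyword names below (use_sampling, top_k, temperature, cfg_coef) and their default values reflect our understanding of set_generation_params in Audiocraft, so check them against the version you installed. build_generation_params and generate_with_seed are hypothetical helpers, and seeding with torch.manual_seed is our assumption about how to get repeatable samples; identical output across GPUs is not guaranteed.

```python
def build_generation_params(duration, temperature=1.0, top_k=250, cfg_coef=3.0):
    """Validate and collect keyword arguments for model.set_generation_params()."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    return {
        "use_sampling": True,        # sample instead of always taking the top token
        "duration": duration,        # clip length in seconds
        "temperature": temperature,  # higher values give more varied output
        "top_k": top_k,              # sample only from the k most likely tokens
        "cfg_coef": cfg_coef,        # how strongly the text prompt steers generation
    }


def generate_with_seed(model, prompts, params, seed=0):
    """Seed PyTorch, apply the parameters, and generate (needs torch and a loaded model)."""
    import torch  # imported lazily so the helper above also works without PyTorch

    torch.manual_seed(seed)
    model.set_generation_params(**params)
    return model.generate(prompts, progress=True)


params = build_generation_params(duration=45, temperature=1.2)
print(params)
# In the notebook:
# audioGenerations = generate_with_seed(model, musicPrompts, params, seed=42)
```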

6. Saving and Displaying the Generated MusicGen Music

After generating the music, we need to save the audio files and then display them using Python’s Audio widget:

for idx, audioGeneration in enumerate(audioGenerations):

    audio_write(f'audio_{idx}', audioGeneration.cpu(), model.sample_rate, strategy="loudness", loudness_compressor=True)

display(Audio('/content/audio_0.wav', rate=sampling_rate))

display(Audio('/content/audio_1.wav', rate=sampling_rate))

display(Audio('/content/audio_2.wav', rate=sampling_rate))

We should get results similar to these:

Complete Code to generate Epic AI music for Free

Here is the complete code for generating music using Audiocraft’s MusicGen in Google Colab:

!pip install -U git+https://github.com/facebookresearch/audiocraft

from audiocraft.models import musicgen

from IPython.display import Audio

from audiocraft.data.audio import audio_write

# Uncomment the model you wish to use

# model = musicgen.MusicGen.get_pretrained('melody', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-small', device='cuda')

model = musicgen.MusicGen.get_pretrained('facebook/musicgen-medium', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-stereo-medium', device='cuda')

# model = musicgen.MusicGen.get_pretrained('facebook/musicgen-large', device='cuda')

duration = 45 # duration in seconds

sampling_rate = model.sample_rate

musicPrompts = ["epic battle music", "piano melancholic ballad", "animated lofi chill beats"]

model.set_generation_params(duration=duration)

audioGenerations = model.generate(musicPrompts, progress=True)

for idx, audioGeneration in enumerate(audioGenerations):

    audio_write(f'audio_{idx}', audioGeneration.cpu(), model.sample_rate, strategy="loudness", loudness_compressor=True)

display(Audio('/content/audio_0.wav', rate=sampling_rate))

display(Audio('/content/audio_1.wav', rate=sampling_rate))

display(Audio('/content/audio_2.wav', rate=sampling_rate))

Models Comparison

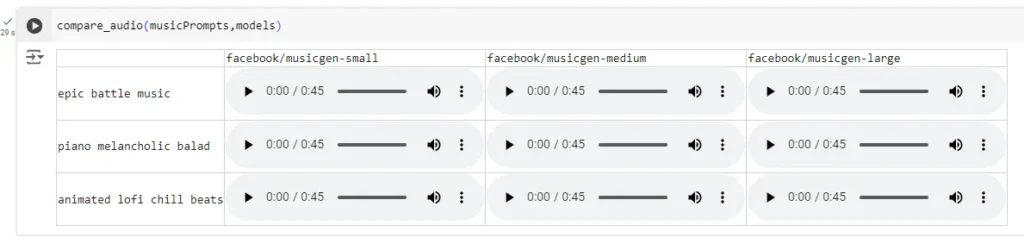

In this section, we will compare the three sizes of the MusicGen models to evaluate the differences in quality. We will use the following models:

- facebook/musicgen-small: 300M model, text-to-music only (🤗 Hub)

- facebook/musicgen-medium: 1.5B model, text-to-music only (🤗 Hub)

- facebook/musicgen-large: 3.3B model, text-to-music only (🤗 Hub)
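The parameter counts above give a quick way to estimate how much GPU memory the weights of each model need. The sketch below assumes 2 bytes per parameter (float16 weights); that figure is our assumption, and the estimate ignores the text encoder, the audio codec and the activations, so real usage will be higher. weight_memory_gb is a hypothetical helper.

```python
# Parameter counts as listed above for the three checkpoints.
MODEL_PARAMS = {
    "facebook/musicgen-small": 300e6,
    "facebook/musicgen-medium": 1.5e9,
    "facebook/musicgen-large": 3.3e9,
}


def weight_memory_gb(num_params, bytes_per_param=2):
    """Rough size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, num_params in MODEL_PARAMS.items():
    print(f"{name}: ~{weight_memory_gb(num_params):.1f} GB")
```

Under these assumptions the weights come to roughly 0.6, 3.0 and 6.6 GB, which is why keeping more than one model loaded at a time quickly becomes a problem on a free Colab GPU.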

Function to Flush Cache

Due to the memory limits of the free Colab tier, we need to flush the cache periodically to manage memory usage effectively.

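import gc

import torch

def flush_cache():

    # Collect unreachable Python objects so the tensors they hold can be released

    gc.collect()

    # Return cached, unused GPU memory to the driver

    torch.cuda.empty_cache()

flush_cache()

Helper Function to Display Audios in a Comparative Grid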

To facilitate a clear and structured comparison of the generated audio outputs from different models, we use Google Colab’s widgets module. This module provides a way to create interactive UI elements, such as grids, which are perfect for our needs.

The compare_audio function leverages these widgets to display the audio files in a comparative grid format. Let’s break down the function and explain each part.

from google.colab import widgets

from IPython.display import Audio

def compare_audio(promptsList, modelsList):

    # One extra row and column for the headers

    grid = widgets.Grid(len(promptsList)+1, len(modelsList)+1)

    for i, (row, col) in enumerate(grid):

        # Show model names in the header row

        if row == 0 and col != 0:

            print(modelsList[col-1])

        # Show prompt names in the first column

        elif row != 0 and col == 0:

            print(promptsList[row-1])

        # Display the audio content

        elif row != 0 and col != 0:

            audio_file = f'/content/audio_{modelsList[col-1].split("/")[-1]}_{row-1}.wav'

            display(Audio(audio_file))

Understanding Colab Widgets

Colab’s widgets module provides layout elements such as grids and tab bars for organizing cell output. In this case, we use the Grid widget to create a structured layout for displaying our audio files.

- Grid Layout: The Grid widget is initialized with a specified number of rows and columns. The size of the grid is determined by the number of prompts plus one (for headers) and the number of models plus one (for headers).

- Interactive Display: The grid allows us to systematically arrange and display our elements, making the comparison between different models straightforward.
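The layout logic can be tried outside Colab with plain lists. The sketch below mirrors the row and column rules described above without using the widgets module; cell_role and layout are hypothetical helpers written for illustration only.

```python
def cell_role(row, col):
    """Classify a grid cell using the same row/column rules as compare_audio."""
    if row == 0 and col == 0:
        return "empty"         # the top-left corner stays blank
    if row == 0:
        return "model header"  # first row holds the model names
    if col == 0:
        return "prompt label"  # first column holds the prompts
    return "audio"             # every other cell holds an audio player


def layout(prompts, models):
    """Return the grid as a list of rows, each a list of cell descriptions."""
    rows = []
    for row in range(len(prompts) + 1):
        cells = []
        for col in range(len(models) + 1):
            role = cell_role(row, col)
            if role == "model header":
                cells.append(models[col - 1])
            elif role == "prompt label":
                cells.append(prompts[row - 1])
            elif role == "audio":
                cells.append(f"audio for prompt {row - 1}, model {col - 1}")
            else:
                cells.append("")
        rows.append(cells)
    return rows


grid = layout(["epic battle music"], ["facebook/musicgen-small", "facebook/musicgen-medium"])
for cells in grid:
    print(cells)
```

One prompt and two models give a grid of two rows and three columns, which matches the "plus one" sizing passed to Grid.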

Generating Audio for Each Model and Each Prompt

We’ll generate music for each prompt using each model to understand the differences in audio quality produced by various models. We begin by defining the duration for the generated audio, the prompts, and the models we want to compare.

Here’s a detailed breakdown of the process:

duration = 45 # duration in seconds

musicPrompts = [

    "epic battle music",

    "piano melancholic ballad",

    "animated lofi chill beats"

]

models = [

    'facebook/musicgen-small',

    'facebook/musicgen-medium',

    'facebook/musicgen-large'

]

audio_table = {}

for model_name in models:

    model = musicgen.MusicGen.get_pretrained(model_name, device='cuda')

    model.set_generation_params(duration=duration)

    sampling_rate = model.sample_rate

    audioGenerations = model.generate(musicPrompts, progress=True)

    audio_table[model_name] = audioGenerations

    flush_cache()

Breakdown of the Process:

- Define Duration and Prompts:

duration = 45 # duration in seconds

musicPrompts = [

    "epic battle music",

    "piano melancholic ballad",

    "animated lofi chill beats"

]

- We set the duration for each generated audio clip to 45 seconds.

- We define a list of prompts that describe the type of music we want to generate. These prompts will be used by the models to create the music.

- Define Models:

models = [

    'facebook/musicgen-small',

    'facebook/musicgen-medium',

    'facebook/musicgen-large'

]

- We specify the models that we want to compare. Each model has different parameters, such as size and complexity, which can affect the quality of the generated audio.

- Initialize Audio Table:

audio_table = {}

- We initialize a dictionary to store the generated audio for each model.

- Generate Audio for Each Model:

for model_name in models:

    model = musicgen.MusicGen.get_pretrained(model_name, device='cuda')

    model.set_generation_params(duration=duration)

    sampling_rate = model.sample_rate

    audioGenerations = model.generate(musicPrompts, progress=True)

    audio_table[model_name] = audioGenerations

    flush_cache()

- For each model, we load the pre-trained model using the get_pretrained method.

- We set the generation parameters, including the duration.

- We generate the audio for the given prompts and store the results in the audio_table dictionary.

- We flush the cache after processing each model to manage memory usage effectively.
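One possible refinement, offered as a sketch and not as the only way to do it: in the loop above, flush_cache runs while the model variable still refers to the loaded weights and audio_table still holds GPU tensors, so little memory can actually be freed at that point. The variant below keeps only CPU copies of the audio and drops its references before collecting. generate_for_models and its load_model argument are hypothetical names; passing the loader in as a function is our own design choice so the loop can be dry-run with a stub.

```python
import gc


def generate_for_models(model_names, prompts, load_model, duration=45):
    """Generate clips with each model in turn, keeping only CPU copies of the audio."""
    audio_table = {}
    for name in model_names:
        model = load_model(name)
        model.set_generation_params(duration=duration)
        audio = model.generate(prompts, progress=True)
        # Keep the result on the CPU so the GPU copy can be released.
        audio_table[name] = audio.cpu()
        # Drop our references before collecting, otherwise nothing can be freed.
        del model, audio
        gc.collect()
        try:
            import torch

            if torch.cuda.is_available():
                torch.cuda.empty_cache()
        except ImportError:
            pass  # no PyTorch installed, e.g. when dry-running with a stub loader
    return audio_table


# In the notebook, the loader would wrap the call used earlier in this post:
# load = lambda name: musicgen.MusicGen.get_pretrained(name, device='cuda')
# audio_table = generate_for_models(models, musicPrompts, load, duration=duration)
```

Because the stored tensors are already on the CPU, the later audioGeneration.cpu() call in the saving step still works unchanged.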

Saving and Displaying the Generated Audio

After generating the audio files, we need to save them to the disk and display them in a comparative grid. This involves writing the audio data to files and then using the previously defined compare_audio function to display the results.

Here’s how we do it:

# Save and display the generated audios

for model_name, audioGenerations in audio_table.items():

    for idx, audioGeneration in enumerate(audioGenerations):

        audio_write(f'audio_{model_name.split("/")[-1]}_{idx}', audioGeneration.cpu(), sampling_rate, strategy="loudness", loudness_compressor=True)

compare_audio(musicPrompts, models)

Breakdown of the Process:

- Save Generated Audios:

- We iterate over the generated audio data stored in the audio_table.

- For each model and its corresponding generated audios, we save the audio data to disk using the audio_write function.

- The filenames are constructed dynamically based on the model name and the prompt index to ensure they are unique and identifiable.

- Display the Results:

- After saving the audio files, we call the compare_audio function to display the audio files in a comparative grid format.

- This function organizes the audio files into a clear and interactive grid, making it easy to compare the outputs from different models.
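Since saving and displaying must agree on the file names, it can help to build them in one place and check that every file exists before drawing the grid. This is a minimal sketch: audio_stem, expected_files and missing_files are hypothetical helpers, the /content directory is Colab's default working directory, and the .wav suffix assumes audio_write is left at its default format.

```python
from pathlib import Path


def audio_stem(model_name, idx):
    """File stem passed to audio_write, built from the model name and prompt index."""
    return f"audio_{model_name.split('/')[-1]}_{idx}"


def expected_files(models, num_prompts, directory="/content"):
    """All .wav paths the comparison grid will try to load."""
    return [
        Path(directory) / f"{audio_stem(model, idx)}.wav"
        for model in models
        for idx in range(num_prompts)
    ]


def missing_files(paths):
    """Return the paths that do not exist yet."""
    return [path for path in paths if not path.exists()]


paths = expected_files(["facebook/musicgen-small"], num_prompts=2)
print([path.name for path in paths])
# ['audio_musicgen-small_0.wav', 'audio_musicgen-small_1.wav']
```

Calling missing_files(paths) before compare_audio gives a readable list of anything that failed to save, instead of an error in the middle of the grid.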

Displaying the Results

Finally, we use the compare_audio function to show the results in a comparative grid format.

compare_audio(musicPrompts, models)

The compare_audio function will give us a result similar to this one:

Conclusion

In this blog post, we explored the process of generating music using Facebook’s MusicGen models. We covered the installation and setup of the Audiocraft library, the generation of music using predefined prompts, and the comparison of different model sizes to evaluate the quality of their outputs.

Key Takeaways:

- Setup and Installation:

- We installed the Audiocraft library and imported necessary modules to get started with MusicGen.

- Music Generation:

- Using predefined prompts like “epic battle music,” “piano melancholic ballad,” and “animated lofi chill beats,” we generated music tracks with different models.

- Model Comparison:

- We compared the outputs from three different models: musicgen-small, musicgen-medium, and musicgen-large. This comparison helped us understand how the size and complexity of the models impact the quality of the generated music.

- Managing Colab Resources:

- We discussed the importance of managing memory resources effectively in Google Colab by flushing the cache between model generations.

- Displaying Results:

- We created a comparative grid using Colab widgets to display and listen to the generated audio files. This interactive approach made it easier to compare and evaluate the different models.

Conclusion:

The MusicGen models provide a powerful tool for generating high-quality music from text prompts. By comparing different model sizes, we observed that larger models tend to produce more sophisticated and detailed audio outputs. However, even the smaller models can generate impressive music, making them suitable for various use cases depending on resource availability and quality requirements.

This experiment highlights the potential of AI in creative fields like music production, where it can assist artists and creators in exploring new possibilities. Whether you’re a musician looking for inspiration or a developer interested in AI-generated content, MusicGen offers a fascinating glimpse into the future of music creation.

Now you have the means to generate AI background music in Colab! You can also follow our previous tutorial, How to generate AI Images in 10 lines of Python, to learn how to generate AI images.

In our next post, we will show you how to create YouTube Shorts or Instagram Reels that combine AI images with AI background music!

Happy coding!