In this guide, we’ll walk you through generating automated AI videos for YouTube Shorts and Instagram Reels, using AI tools to create the background images and music. We will use Stable Diffusion, Hugging Face’s Diffusers and Transformers libraries, Audiocraft’s MusicGen, and the MoviePy library to put everything together. Let’s get started!

Step 1: Install Necessary Libraries

First, we need to install all the required libraries. Run the following commands in your Colab notebook:


!pip install --upgrade \
    diffusers \
    transformers \
    safetensors \
    sentencepiece \
    accelerate \
    bitsandbytes
!pip install -U git+https://github.com/facebookresearch/audiocraft

NOTE: The Colab notebook may ask you to restart the runtime after this step. Do so before continuing.
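After the restart, a quick check that every package can be found may save a failed run later on. The following is a minimal sketch using only the standard library; the helper name check_packages is ours, and the list assumes the import names of the packages used throughout this guide (including cv2 and moviepy, which Step 4 relies on).

```python
from importlib.util import find_spec

# Import names (not pip names) of the packages this guide relies on.
REQUIRED = ["torch", "diffusers", "transformers", "audiocraft", "moviepy", "cv2"]

def check_packages(names):
    """Return a dict mapping each import name to True if Python can locate it."""
    status = {}
    for name in names:
        try:
            status[name] = find_spec(name) is not None
        except (ImportError, ValueError):
            status[name] = False
    return status

for name, ok in check_packages(REQUIRED).items():
    print(f"{name:<14}{'ok' if ok else 'MISSING'}")
```

Anything reported as MISSING can be installed with pip before moving on to the next step.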

Step 2: Generate a Background Image

We will use a Stable Diffusion model to create a high-quality background image. Read our first blog post for more detailed information about generating AI images in 10 lines of Python.

from diffusers import DiffusionPipeline, EulerAncestralDiscreteScheduler
import torch

# Load the diffusion pipeline
pipe = DiffusionPipeline.from_pretrained(
    'Alkimia/Dark-Sushi-2.5D',
    torch_dtype=torch.float16,
    safety_checker=None,
)
pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)
pipe.to("cuda")

# Define the image generation prompt
prompt = "masterpiece, best quality, (1girl), woman, small breasts, slim body, full body, green hair, short hair, messy hair light green eyes, large black hoodie, pink sweatpants"
negative_prompt = "nude, bad anatomy, blurry, fuzzy, extra legs, extra arms, extra fingers, badly drawn hands, poorly drawn feet, disfigured, out of frame, tiling, terrible art, deformed, mutated, CGI, octane, render, 3d, doll"

# Generate the image (576x1024 is a vertical 9:16 frame)
images = pipe(prompt=prompt, negative_prompt=negative_prompt, height=1024, width=576, guidance_scale=8, num_inference_steps=30).images[0]
images.save("image_out.jpeg")
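The 576x1024 size passed to pipe() above is a vertical 9:16 frame, which is the shape Shorts and Reels use. If you want to try other resolutions, keep in mind that the Stable Diffusion pipelines in Diffusers expect a height and width divisible by 8. Here is a small sketch of a helper that derives a matching width from a chosen height; portrait_size is a hypothetical name, not part of any library.

```python
def portrait_size(height, aspect=(9, 16), multiple=8):
    """Return (width, height) for a portrait frame, snapped to `multiple`."""
    if height <= 0 or multiple <= 0:
        raise ValueError("height and multiple must be positive")
    # Snap the height down to the nearest allowed multiple first.
    height = max(multiple, (height // multiple) * multiple)
    # Derive the width from the aspect ratio, then round it to the nearest multiple.
    width = height * aspect[0] / aspect[1]
    width = max(multiple, int(round(width / multiple)) * multiple)
    return width, height

print(portrait_size(1024))  # (576, 1024), the size used above
print(portrait_size(768))   # (432, 768)
```

Smaller sizes render faster and use less GPU memory, at the cost of detail.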

Step 3: Generate Background Music

Next, we will use the MusicGen model from Meta’s Audiocraft library to create background music. If you want more detailed information about generating AI music with MusicGen, visit our previous blog post.


from audiocraft.models import musicgen
from IPython.display import Audio
from audiocraft.data.audio import audio_write

# Load the MusicGen model
model = musicgen.MusicGen.get_pretrained('facebook/musicgen-medium', device='cuda')

# Define music generation parameters
duration = 30  # duration in seconds
musicPrompts = ["happy piano tune"]
model.set_generation_params(duration=duration)
sampling_rate = model.sample_rate

# Generate the music
audioGenerations = model.generate(musicPrompts, progress=True)

# Save the first generated track as musicgen_out.wav
audio_write("musicgen_out", audioGenerations[0, 0].cpu(), model.sample_rate, strategy="loudness", loudness_compressor=True)

Step 4: Create the automated AI Videos

Now, let’s combine the generated image and music to create automated AI videos using OpenCV and moviepy.

MoviePy is a versatile Python library designed for video editing. It allows users to perform various video manipulations, such as cutting, concatenating, and adding effects to video clips. With MoviePy, you can easily handle tasks like resizing videos, applying filters, and overlaying text or images. One of its standout features is the ability to work with audio, making it simple to add background music or synchronize sound with video footage. MoviePy is particularly user-friendly and integrates seamlessly with other libraries like OpenCV, making it a powerful tool for beginners and experienced developers looking to create and edit video content programmatically.

This code snippet demonstrates how to create a video from a single image and add background music to it using Python libraries cv2 (OpenCV) and moviepy.

import cv2
from moviepy.editor import *

# Define video parameters
fps = 10
duration = 30
image_path = "image_out.jpeg"
audio_path = "musicgen_out.wav"
output_video = "video_out.mp4"

# Load the image
frame = cv2.imread(image_path)
height, width, channels = frame.shape

# Initialize the video writer
video_writer = cv2.VideoWriter(output_video, cv2.VideoWriter_fourcc(*'mp4v'), fps, (width, height))

# Write the same frame fps * duration times to get a still video
frames_per_image = fps * duration
for _ in range(frames_per_image):
    video_writer.write(frame)
video_writer.release()
print(f"Video {output_video} created successfully")

# Load the video and audio
mainVideo = VideoFileClip(output_video)
audio = AudioFileClip(audio_path).set_duration(mainVideo.duration).set_start(0)

# Add the audio to the video
video_with_audio = mainVideo.set_audio(audio)

# Export the final video
video_with_audio.write_videofile("final_video.mp4")
print("Final video with audio created successfully")

This script is a straightforward way to create a video from a single image and overlay it with audio, useful for generating content such as YouTube Shorts or other social media posts where static images with background music can be engaging.
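Since the script trims the audio to the video length with set_duration, a track that is shorter than the video is worth catching before the export step. Below is a minimal sketch that reads a WAV file's length with Python's built-in wave module. It assumes the file written by audio_write is plain PCM WAV; wav_duration and write_silence are hypothetical helpers, and the 32000 Hz sample rate is only a placeholder for the demo file.

```python
import os
import tempfile
import wave

def wav_duration(path):
    """Return the length of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()

def write_silence(path, seconds, sample_rate=32000):
    """Write a mono 16-bit silent WAV; only used here to demo wav_duration."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        wav.writeframes(b"\x00\x00" * int(seconds * sample_rate))

demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
write_silence(demo_path, seconds=2)

video_seconds = 300 / 10  # frames written / fps, as in the script above
audio_seconds = wav_duration(demo_path)
print(audio_seconds)                   # 2.0
print(audio_seconds >= video_seconds)  # False: this demo track is too short
```

In the notebook, you would call wav_duration("musicgen_out.wav") instead of using the demo file.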

It should give you a result similar to this:

Let’s Spice It Up a Little

Let’s make our example more interesting and create better automated AI videos by adding some new features that make the automation process more versatile and the results more varied. We will add the following functionality to our video creation workflow:

- Generate multiple images per video with some degree of variation

- Add some camera movement

- Add a subscribe video animation

- Generate a batch of automated AI videos for every execution

For a more complex example, we’ll add randomness to the images, use multiple images in one video, and add a camera zoom effect.

Detailed Breakdown

1. Import Libraries and Define Prompt Options

Before diving into the functions and main loop, we need to import the necessary libraries and define the options for generating diverse prompts.

import math

import numpy as np

import cv2

from moviepy.editor import *

import os

import gc

import torch

from random import randint

from IPython.display import Audio

from transformers import AutoProcessor

from audiocraft.models import musicgen

from audiocraft.data.audio import audio_write

Define Prompt Options

These lists provide the attributes used to build diverse image prompts, plus a list of prompts for the background music:

age_options = ["girl", "woman", "auntie", "middle-aged woman"]

physique_options = ["scrawny", "slim", "thin", "sexy", "gorgeous", "elegant", "chubby", "muscular", "buff", "big body", "large"]

breasts_options = ["flat chest", "small breasts", "medium breasts", "big breasts"]

hair_options = ["pixiecut", "bob", "long", "short", "long locks", "hair bun", "double bun", "braided hair", "braided bun", "hair drill", "pigtail", "twin tails", "ponytail"]

eye_options = ["black", "brown", "blue", "green", "orange", "red", "yellow", "light purple"]

hair_color_options = ["black", "brown", "blonde", "red", "green", "blue", "purple", "pink", "orange"]

emotion_options = ["smile", "expressionless", "sad", "excited", "embarrassed", "nervous", "shy"]

arms_options = ["hand on face", "hand on hip", "hand in pocket", "hand over head", "arms crossed", "finger on lip"]

looking_options = ["looking over back/shoulder", "looking into distance", "looking at viewer"]

view_options = ["top down view", "side view", "back view"]

camera_options = ["close up", "cowboy shot", "mid shot", "full body", "dutch angle shot", "fisheye shot"]

action_options = ["standing", "sitting in a bench", "sitting in a fence", "lying in her bed", "walking on the street", "playing in a park", "posing", "jumping"]

time_of_day_options = ["at sunrise", "at day time", "in the evening", "at sunset", "at night"]

clothes_options = ["maid dress", "red dress", "elegant dress", "black hoodie", "green t-shirt", "white t-shirt", "pink hoodie", "big sweater", "purple wool jumper"]

music_prompt_list = ["lofi happy tune", "lofi 60bpm beat song", "lofi animated beats, chill hiphop", "lofi romantic melody, epic romantic love", "lofi sad missing you tune", "lofi nostalgia serenade, missing you", "lofi music for cold days and nights", "lofi relaxing tunes", "lofi study concentration music", "lofi nostalgia relax and melancholy"]

Base and Negative Prompts

Define the base prompt template and the negative prompt to filter out unwanted features.

base_prompt = "best image, detailed image, 8k, 1girl, CAMERA_OPTIONS, HAIR_COLOR_OPTIONS HAIR_OPTIONS hair girl, EYE_OPTIONS eyes, (wearing a CLOTHES_OPTIONS:1.3), ACTION_OPTIONS, TIME_OF_DAY_OPTIONS"

negative_prompt = "nude, bad anatomy, blurry, fuzzy, extra legs, extra arms, extra fingers, badly drawn hands, poorly drawn feet, disfigured, out of frame, tiling, terrible art, deformed, mutated, cgi, octane, render, 3d, doll"

2. Define Helper Functions

Function: generatePrompts

This function generates a random prompt using the predefined options. The basic idea is to replace each placeholder in base_prompt with a random option from the corresponding list:

def generatePrompts():

age_value = randint(0, len(age_options)-1)

breasts_value = randint(0, len(breasts_options)-1)

physique_value = randint(0, len(physique_options)-1)

emotion_value = randint(0, len(emotion_options)-1)

camera_value = randint(0, len(camera_options)-1)

hair_color_value = randint(0, len(hair_color_options)-1)

hair_value = randint(0, len(hair_options)-1)

eye_value = randint(0, len(eye_options)-1)

clothes_value = randint(0, len(clothes_options)-1)

action_value = randint(0, len(action_options)-1)

time_of_day_value = randint(0, len(time_of_day_options)-1)

prompt = base_prompt

prompt = prompt.replace("AGE_OPTIONS", age_options[age_value])

prompt = prompt.replace("BREASTS_OPTIONS", breasts_options[breasts_value])

prompt = prompt.replace("PHYSIQUE_OPTIONS", physique_options[physique_value])

prompt = prompt.replace("EMOTIONS_OPTIONS", emotion_options[emotion_value])

prompt = prompt.replace("CAMERA_OPTIONS", camera_options[camera_value])

prompt = prompt.replace("HAIR_COLOR_OPTIONS", hair_color_options[hair_color_value])

prompt = prompt.replace("HAIR_OPTIONS", hair_options[hair_value])

prompt = prompt.replace("EYE_OPTIONS", eye_options[eye_value])

prompt = prompt.replace("CLOTHES_OPTIONS", clothes_options[clothes_value])

prompt = prompt.replace("ACTION_OPTIONS", action_options[action_value])

prompt = prompt.replace("TIME_OF_DAY_OPTIONS", time_of_day_options[time_of_day_value])

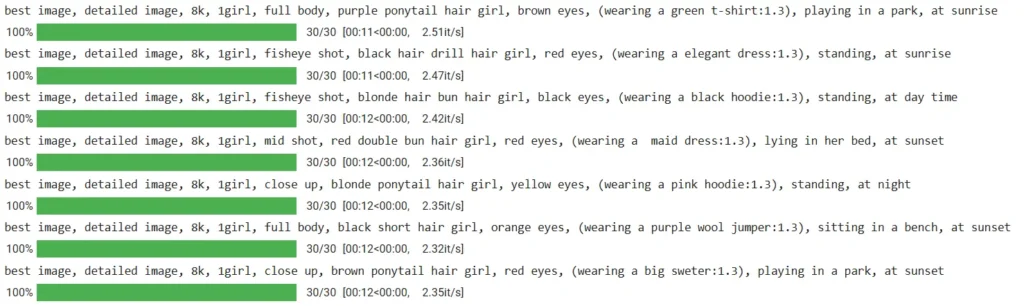

return promptThe generatePrompt function return structured semi randomized prompts useful to create variations in our automated AI videos:
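The same placeholder-replacement idea can be written more compactly with a dictionary and random.choice. This is only a sketch of an alternative, not the function used in the rest of the guide: fill_template, OPTIONS and TEMPLATE are hypothetical names, and the option lists are shortened for the example. Passing a seeded random.Random makes the prompts reproducible, which helps when you want to regenerate a video you liked.

```python
import random

# Hypothetical, shortened option lists; swap in the full lists defined earlier.
OPTIONS = {
    "CAMERA_OPTIONS": ["close up", "full body"],
    "HAIR_COLOR_OPTIONS": ["green", "blue"],
    "HAIR_OPTIONS": ["short", "ponytail"],
    "CLOTHES_OPTIONS": ["black hoodie", "red dress"],
}
TEMPLATE = "1girl, CAMERA_OPTIONS, HAIR_COLOR_OPTIONS HAIR_OPTIONS hair, (wearing a CLOTHES_OPTIONS:1.3)"

def fill_template(template, options, rng=random):
    """Replace every placeholder key with one randomly chosen option."""
    prompt = template
    # Longest keys first, so a key that is a substring of another cannot clobber it.
    for key in sorted(options, key=len, reverse=True):
        prompt = prompt.replace(key, rng.choice(options[key]))
    return prompt

rng = random.Random(42)  # fixed seed: the same sequence of prompts on every run
for _ in range(3):
    print(fill_template(TEMPLATE, OPTIONS, rng))
```

Adding a new attribute then only requires a new dictionary entry and a matching placeholder in the template.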

Function: flush

This function clears the GPU memory to ensure smooth performance.

def flush():
    # Run Python's garbage collector, then release cached GPU memory held by PyTorch
    gc.collect()
    torch.cuda.empty_cache()

Function: Zoom

This function applies a zoom effect to the automated AI videos. There are multiple ways to generate camera movement in MoviePy, but I found this function in a GitHub issue comment and it works perfectly!

def Zoom(clip, mode='in', position='center', speed=1):
    fps = clip.fps
    duration = clip.duration
    total_frames = int(duration * fps)

    def main(getframe, t):
        frame = getframe(t)
        h, w = frame.shape[:2]
        i = t * fps
        if mode == 'out':
            i = total_frames - i
        # Scale factor grows linearly from 1 to 1 + 0.1 * speed over the clip
        zoom = 1 + (i * ((0.1 * speed) / total_frames))
        # Translation that keeps the chosen anchor point fixed while scaling
        positions = {
            'center': [(w - (w * zoom)) / 2, (h - (h * zoom)) / 2],
            'left': [0, (h - (h * zoom)) / 2],
            'right': [(w - (w * zoom)), (h - (h * zoom)) / 2],
            'top': [(w - (w * zoom)) / 2, 0],
            'topleft': [0, 0],
            'topright': [(w - (w * zoom)), 0],
            'bottom': [(w - (w * zoom)) / 2, (h - (h * zoom))],
            'bottomleft': [0, (h - (h * zoom))],
            'bottomright': [(w - (w * zoom)), (h - (h * zoom))]
        }
        tx, ty = positions[position]
        # 2x3 affine matrix: scale by zoom, then shift by (tx, ty)
        M = np.array([[zoom, 0, tx], [0, zoom, ty]])
        frame = cv2.warpAffine(frame, M, (w, h))
        return frame

    return clip.fl(main)

3. Main Loop to Create Videos

This section of the code combines everything to generate multiple automated AI videos with various images and background music.

numFinalVideos = 2
numImagesPerVideo = 7
fps = 25
duration = 30
add_bg_Video = False  # Set to True to add a "subscribe animation" to your video
bg_video = "subscribe_shorts.mov"

# Main loop to create automated AI videos
for x in range(numFinalVideos):
    imagesList = []
    videoClips = []

    # Generate the images for this video
    for y in range(numImagesPerVideo):
        prompt = generatePrompts()
        print(prompt)
        images = pipe(prompt=prompt, negative_prompt=negative_prompt, height=1024, width=576, guidance_scale=8, num_inference_steps=30).images[0]
        img_name = "image_" + str(x) + "_" + str(y) + ".jpeg"
        imagesList.append(img_name)
        images.save(img_name)

    # Generate the background music from a random music prompt
    model.set_generation_params(duration=duration)
    musicPrompt = randint(0, len(music_prompt_list) - 1)
    audioGenerations = model.generate([music_prompt_list[musicPrompt]], progress=True)
    audio_name = "musicgen_" + f"{x:02d}"
    audio_write(audio_name, audioGenerations[0, 0].cpu(), model.sample_rate, strategy="loudness", loudness_compressor=True)

    final_video = "final_video_" + f"{x:02d}" + ".mp4"

    # Use the last generated image to get the frame size
    frame = cv2.imread(img_name)
    height, width, channels = frame.shape
    size = (width, height)

    # Turn each image into a short zooming clip.
    # Note: the clips are built at 10 fps; the fps variable above is not used here.
    slides = []
    for n, url in enumerate(imagesList):
        slides.append(
            ImageClip(url).set_fps(10).set_duration(duration / numImagesPerVideo).resize(size)
        )
        slides[n] = Zoom(slides[n], position='top')

    video = concatenate_videoclips(slides)
    videoClips.append(video)
    mainVideo = concatenate_videoclips(videoClips)

    # Optionally overlay the subscribe animation at seconds 2 and 22
    if add_bg_Video:
        bgVideo = VideoFileClip(bg_video, has_mask=True)
        video = CompositeVideoClip([mainVideo, bgVideo.set_start(2), bgVideo.set_start(22)])
    else:
        video = mainVideo

    # Add the generated audio and export
    audio = AudioFileClip(audio_name + ".wav")
    audio = audio.set_duration(video.duration).set_start(0)
    video_with_audio = video.set_audio(audio)
    video_with_audio.write_videofile(final_video)

    # Free memory before the next iteration
    del video
    del mainVideo
    flush()
    print(f"Video {final_video} created successfully")

As the code above shows, when add_bg_Video is set to True, the “subscribe_shorts.mov” clip is placed on top of the generated video. You can download this video file from here
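To see how the timing in the loop works out, the sketch below computes when each slide is on screen for a 30-second video with 7 images, and which slide is visible when the two overlay clips start (seconds 2 and 22 in the code above). It is plain arithmetic with no video libraries involved; slide_schedule and slide_at are hypothetical helper names.

```python
def slide_schedule(total_seconds, num_slides):
    """Return (start, end) times in seconds for equally long slides."""
    if num_slides <= 0:
        raise ValueError("num_slides must be positive")
    length = total_seconds / num_slides
    return [(i * length, (i + 1) * length) for i in range(num_slides)]

def slide_at(schedule, t):
    """Index of the slide visible at time t, or None if t is outside the video."""
    for index, (start, end) in enumerate(schedule):
        if start <= t < end:
            return index
    return None

schedule = slide_schedule(30, 7)
print(round(schedule[0][1], 2))  # 4.29 seconds per slide
print(slide_at(schedule, 2))     # 0: the first overlay starts during the first slide
print(slide_at(schedule, 22))    # 5: the second overlay starts during the sixth slide
```

If you change duration or numImagesPerVideo, this kind of check helps confirm that the overlay start times still fall inside the video.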

Detailed Explanation:

- Loop Initialization:

- The outer loop (for x in range(numFinalVideos):) iterates to create the specified number of final videos.

- imagesList and videoClips are initialized to store the generated images and video clips.

- Image Generation:

- For each video, the inner loop (for y in range(numImagesPerVideo):) generates the specified number of images.

- A random prompt is generated using generatePrompts().

- The image is generated using the pipe() function and saved with a unique name.

- Music Generation:

- The model is set up to generate background music.

- A random prompt from music_prompt_list is selected.

- Music is generated and saved as an audio file.

- Video Creation:

- The dimensions of the images are obtained to maintain consistency in video size.

- The slides list stores video clips created from each image, applying the Zoom effect.

- concatenate_videoclips combines these clips into a single video (mainVideo).

- Background Video:

- Optionally, a background video (bgVideo) can be overlaid on mainVideo.

- Audio Integration:

- The generated audio is added to the video (video_with_audio).

- The final video is saved as an MP4 file.

- Clean Up:

- GPU memory is cleared to ensure smooth performance for the next iteration.

- The loop continues until all videos are generated.
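Once the loop has finished, it can be handy to confirm that every file it was supposed to write is actually there. The sketch below rebuilds the file names from the naming scheme used in the main loop (image_x_y.jpeg, musicgen_xx.wav and final_video_xx.mp4) and reports any that are missing; expected_outputs and missing_outputs are hypothetical helpers.

```python
import os

def expected_outputs(num_videos, images_per_video):
    """List the file names the main loop writes, following its naming scheme."""
    names = []
    for x in range(num_videos):
        names += [f"image_{x}_{y}.jpeg" for y in range(images_per_video)]
        names.append(f"musicgen_{x:02d}.wav")
        names.append(f"final_video_{x:02d}.mp4")
    return names

def missing_outputs(names, folder="."):
    """Return the expected files that do not exist in `folder`."""
    return [name for name in names if not os.path.exists(os.path.join(folder, name))]

names = expected_outputs(2, 7)
print(len(names))  # 18 files: 14 images, 2 audio tracks, 2 videos
print(missing_outputs(names))
```

An empty list from missing_outputs means the whole batch was written.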

By following these steps, you can create high-quality AI-generated YouTube Shorts with diverse visuals and custom background music, enhancing your content and engagement. Your generated videos should look like this one:

Conclusion

In this advanced guide, we’ve explored the process of creating automated AI videos for YouTube Shorts and Instagram reels by combining powerful tools for image and music generation, applying video effects, and integrating them into cohesive videos. By following the detailed steps and utilizing the provided code, you’ve learned how to generate diverse prompts, create images based on those prompts, generate background music, and produce automated AI videos that incorporate these elements seamlessly.

Key Takeaways:

- Diverse Prompt Generation:

- We’ve demonstrated how to use predefined options to generate random prompts, ensuring that each image and video clip is unique and engaging.

- Image and Music Generation:

- Utilizing advanced models like Stable Diffusion and Audiocraft’s MusicGen, you can generate high-quality images and music that align with your creative vision.

- Video Editing Techniques:

- Applying effects such as zoom and combining video clips into a single cohesive video, you have the skills to enhance the visual appeal of your content.

Experimentation:

- Customization of Prompts:

- Experiment with different combinations of prompt options to see how they affect the generated images. Try adding new categories or modifying existing ones to expand the diversity of your content.

- Effect Variations:

- Test different video effects beyond zoom, such as rotations, transitions, or color grading, to add variety and professionalism to your automated AI videos.

- Music Generation:

- Explore generating different genres or styles of music by modifying the prompts or trying out different models for music generation.

- Integrating Background Videos:

- Experiment with adding various background videos or animations to see how they enhance the final output. This can be useful for creating thematic content or adding a call to action, like a subscription animation.
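When experimenting with effect variations, it helps to see what the Zoom helper computes for each frame. The sketch below reproduces the arithmetic for the 'center' position in plain Python, so it runs without OpenCV or MoviePy: the scale grows linearly from 1 to 1 + 0.1 * speed, and the translation shifts the frame by half of the extra size so the center stays in place. The name zoom_matrix is ours and only mirrors the logic shown earlier.

```python
def zoom_matrix(frame_index, total_frames, width, height, speed=1.0, mode="in"):
    """Return the 2x3 affine matrix for a centered zoom at the given frame."""
    i = total_frames - frame_index if mode == "out" else frame_index
    # Scale grows linearly from 1.0 to 1.0 + 0.1 * speed over the clip.
    zoom = 1 + i * (0.1 * speed) / total_frames
    # Shift by half of the extra size so the frame center stays fixed.
    tx = (width - width * zoom) / 2
    ty = (height - height * zoom) / 2
    return [[zoom, 0.0, tx], [0.0, zoom, ty]]

first = zoom_matrix(0, 100, 576, 1024)
last = zoom_matrix(100, 100, 576, 1024)
faster = zoom_matrix(100, 100, 576, 1024, speed=3)

print(first)         # identity: no zoom on the first frame
print(last[0][0])    # about 1.1 at the end of the clip
print(faster[0][0])  # about 1.3 with speed=3
```

Raising speed gives a more pronounced push-in, and mode="out" simply runs the same ramp in reverse.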

This guide showcases the remarkable power of creating automated AI Videos and other content. By using tools like Stable Diffusion for creating stunning images, MusicGen for crafting background music, and OpenCV with MoviePy for assembling videos, you can streamline and elevate your content creation process. The beauty of this approach lies in its simplicity and efficiency—turning a few lines of code into captivating multimedia content that stands out. Whether you’re a social media enthusiast looking to generate engaging posts, a marketer aiming to enhance your campaigns, or just someone curious about the potential of AI, this method offers a practical and creative solution.

Experimenting with different prompts and settings not only brings diversity to your creations but also highlights the endless possibilities AI provides in the realm of digital content. This isn’t just about saving time; it’s about unlocking a new level of creativity and quality in your projects.

Happy Coding!